Getting Started with git Branching Strategies and dbt

Hi! We’re Christine and Carol, Resident Architects at dbt Labs. Our day-to-day work is all about helping teams reach their technical and business-driven goals. Collaborating with a broad spectrum of customers ranging from scrappy startups to massive enterprises, we’ve gained valuable experience guiding teams to implement architecture which addresses their major pain points.

The information we’re about to share isn't just from our experiences - we frequently collaborate with other experts like Taylor Dunlap and Steve Dowling who have greatly contributed to the amalgamation of this guidance. Their work lies in being the critical bridge for teams between implementation and business outcomes, ultimately leading teams to align on a comprehensive technical vision through identification of problems and solutions.

Why are we here?

We help teams with dbt architecture, which encompasses the tools, processes and configurations used to start developing and deploying with dbt. There’s a lot of decision making that happens behind the scenes to standardize on these pieces - much of which is informed by understanding what we want the development workflow to look like. The focus on having the perfect workflow often gets teams stuck in heaps of planning and endless conversations, which slows down or even stops momentum on development. If you feel this, we’re hoping our guidance will give you a great sense of comfort in taking steps to unblock development - even when you don’t have everything figured out yet!

There are three major tools that play an important role in dbt development:

- A repository

  Contains the code we want to change or deploy, along with tools for change management processes.

- A data platform

  Contains data for our inputs (loaded from other systems) and databases/schemas for our outputs, as well as permission management for data objects.

- A dbt project

  Helps us manage development and deployment processes of our code to our data platform (and other cool stuff!)

No matter how you end up defining your development workflow, these major steps are always present:

- Development: How teams make and test changes to code

- Quality Assurance: How teams ensure changes work and produce expected outputs

- Promotion: How teams move changes to the next stage

- Deployment: How teams surface changes to others

This article will be focusing mainly on the topic of git and your repository, how code corresponds to populating your data platform, and the common dbt configurations we implement to make this happen. We’ll also be pinning ourselves to the steps of the development workflow throughout.

Why focus on git?

Source control (and git in particular) is foundational to modern development with or without dbt. It facilitates collaboration between teams of any size and makes it easy to maintain oversight of the code changes in your project. Understanding these controlled processes and what code looks like at each step makes understanding how we need to configure our data platform and dbt much easier.

⭐️ How to “just get started” ⭐️

This article will be talking about git topics in depth — this will be helpful if your team is familiar with some of the options and needs help considering the tradeoffs. If you’re getting started for the first time and don’t have strong opinions, we recommend starting with Direct Promotion.

Direct Promotion is the foundation of all git branching strategies, works well with basic git knowledge, requires the least amount of provisioning, and can easily evolve into another strategy if or when your team needs it. We understand this recommendation can invoke some thoughts of “what if?”. We urge you to think about starting with direct promotion like getting a suit tailored. Your developers can wear it while you’re figuring out the adjustments, and this is a much more informative step forward because it allows us to see how the suit functions in motion — our resulting adjustments can be starkly different than what we thought we’d need when it was static.

The best part with ‘just getting started’ is that it’s not hard to change configurations in dbt for your git strategy later on (and we'll cover this), so don’t think of this as a critical decision that will result in months of breaking development for re-configuration if you don’t get it right immediately. Truly, changing your git strategy can be done in a matter of minutes in dbt Cloud.

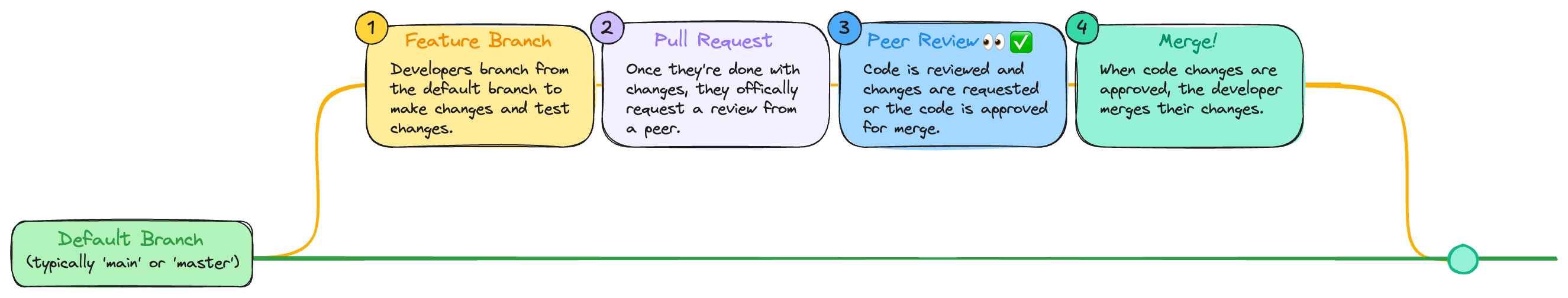

Branching Strategies

Once a repository has its initial commit, it always starts with one default branch which is typically called main or master - we’ll be calling the default branch main in our upcoming examples. The main branch is always the final destination that we’re aiming to land our changes, and most often corresponds to the term "production" - another term you'll see us use throughout.

How we want our workflow to look getting our changes from development to main is the big discussion. Our process needs to consider all the steps in our workflow: development, quality assurance, promotion, and deployment.

Branching Strategies define what this process looks like. We at dbt are not reinventing the wheel - a number of common strategies have already been defined, implemented, iterated on, and tested for at least a decade.

There are two major strategies that encompass all forms of branching strategies: Direct Promotion and Indirect Promotion. We’ll start by laying these two out simply:

- What is the strategy?

- How does the development workflow of the strategy look to a team?

- Which repository branching rules and helpers help us in this strategy?

- How do we commonly configure dbt Cloud for this strategy?

- How do branches and dbt processes map to our data platform with this strategy?

Then, we’ll end by comparing the strategies and covering some frequently asked questions.

There are many ways to configure each tool (especially dbt) to accomplish what you need. The upcoming strategy details were written intentionally to provide what we think are the minimal standards to get teams up and running quickly. These are starter configurations and practices which are easy to tweak and adjust later on. Expanding on these configurations is an exercise left to the reader!

Direct Promotion

Direct promotion means we only keep one long-lived branch in our repository - in our case, main. Here’s the workflow for this strategy:

How does the development workflow look to a team?

Layout:

- `feature` is the developer’s unique branch where task-related changes happen

- `main` is the branch that contains our “production” version of code

Workflow:

- Development: I create a `feature` branch from `main` to make, test, and personally review changes

- Quality Assurance: I open a pull request comparing my `feature` against `main`, which is then reviewed by peers (required), stakeholders, or subject matter experts (SMEs). We highly recommend including stakeholders or SMEs for feedback during PR in this strategy because the next step changes `main`.

- Promotion: After all required approvals and checks, I merge my changes to `main`

- Deployment: Others can see and use my changes in `main` after I merge and `main` is deployed
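The direct promotion workflow above can be sketched with plain git commands. This is a minimal illustration run in a throwaway repository, not a prescribed setup: the file name `orders.sql`, the branch name `feature/add-status`, and the committer identity are all hypothetical, and the pull request merge that would normally happen on your git platform is simulated with a local `git merge`.

```shell
set -eu

# Scratch repository so the sketch is safe to run anywhere.
repo="$(mktemp -d)"
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/main      # name the default branch main
git config user.name "Example Dev"         # placeholder identity
git config user.email "dev@example.com"

# Starting point: main holds the "production" version of the code.
echo "select 1 as id" > orders.sql
git add orders.sql
git commit -q -m "Initial production model"

# Development: create a feature branch from main and commit a change.
git checkout -q -b feature/add-status main
echo "select 1 as id, 'new' as status" > orders.sql
git commit -q -am "Add status column to orders"

# Quality Assurance happens on the pull request (feature -> main) in your
# git platform; there is no local command for that step.

# Promotion: the platform merges the approved PR. Simulated here locally.
git checkout -q main
git merge -q --no-ff -m "Merge feature/add-status" feature/add-status

# Deployment: main now contains the change, ready for the production job.
git log --oneline --graph main
```

In day-to-day use only the Development step runs on a developer's machine (or in the dbt Cloud IDE); review and merge happen through the pull request.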

Repository Branching Rules and Helpers

At a minimum, we like to set up:

- Branch protection on `main` (like these settings for GitHub), requiring:

  - a pull request (no direct commits to `main`)

  - pull requests must have at least 1 reviewer's approval

- A PR template (such as our boiler-plate PR template) for `feature` PRs against `main`

dbt Cloud Processes and Environments

Here’s our branching strategy again, but now with the dbt Cloud processes we want to incorporate:

In order to create the jobs in our diagram, we need dbt Cloud environments. Here are the common configurations for this setup:

| Environment Name | Environment Type | Deployment Type | Base Branch | Will handle… |
|---|---|---|---|---|
| Development | development | - | main | Operations done in the IDE (including creating feature branches) |
| Continuous Integration | deployment | General | main | A continuous integration job |
| Production | deployment | Production | main | A deployment job |

Data Platform Organization

Now we need to focus on where we want to build things in our data platform. For that, we need to set our database and schema settings on the environments. Here’s our diagram again, but now mapping how we want our objects to populate from our branches to our data platform:

Taking the table we created previously for our dbt Cloud environment, let's further map environment configurations to our data platform:

| Environment Name | Database | Schema |
|---|---|---|
| Development | development | User-specified in Profile Settings > Credentials |
| Continuous Integration | development | Any safe default, like dev_ci (it doesn’t even have to exist). The job we intend to set up will override the schema here anyway to denote the unique PR. |
| Production | production | analytics |

We are showing environment configurations here, but a default database will be set at the highest level in a connection (which is a required setting of an environment). Deployment environments can override a connection's database setting when needed.

Direct Promotion Example

In this example, Steve uses the term “QA” for defining the environment which builds the changed code from feature branch pull requests. This is equivalent to our ‘Continuous Integration’ environment — this is a great example of defining names which make the most sense for your team!

Indirect Promotion

Indirect Promotion introduces more steps of ownership, so this branching strategy works best when you can identify people who have a great understanding of git to handle branch management. Additionally, the time from development to production is lengthier due to the workload of these new steps, so it requires good project management. We expand more on this later, but it’s an important call out as this is where we see unprepared teams struggle most.

Indirect promotion adds other long-lived branches that derive from main. The most simple version of indirect promotion is a two-trunk hierarchical structure - this is the one we see implemented most commonly in indirect workflows.

Hierarchical promotion is promoting changes back the same way we derived the branches. Example:

- a middle branch is derived from `main`

- feature branches derive from the middle branch

- feature branches merge back to the middle branch

- the middle branch merges back to `main`

Some common names for a middle branch as seen in the wild are:

- `qa`: Quality Assurance

- `uat`: User Acceptance Testing

- `staging` or `preprod`: Common software development terminology

We’ll be calling our middle branch qa throughout the rest of this article.

Here’s the workflow for this strategy:

How does the development workflow look to a developer?

Changes from our direct promotion workflow are highlighted in blue.

Layout:

- `feature` is the developer’s unique branch where task-related changes happen

- `qa` contains approved changes from developers’ `feature` branches, which will be merged to main and enter production together once additional testing is complete. `qa` is always ahead of `main` in changes.

- `main` is the branch that contains our “production” version of code

Workflow:

- Development: I create a `feature` branch from `qa` to make, test, and personally review changes

- Quality Assurance: I open a pull request comparing my `feature` branch to `qa`, which is then reviewed by peers and optionally subject matter experts or stakeholders

- Promotion: After all required approvals and checks, I can merge my changes to `qa`

- Quality Assurance: SMEs or other stakeholders can review my changes in `qa` when I merge my `feature`

- Promotion: Once QA specialists give their approval of `qa`’s version of data, a release manager opens a pull request using `qa`’s branch targeting `main` (we define this as a “release”)

- Deployment: Others can see and use my changes (and others’ changes) in `main` after `qa` is merged to `main` and `main` is deployed
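As a rough sketch of the same flow in git terms, the commands below walk one change from a feature branch through `qa` and into `main` in a throwaway repository. The file name, feature branch name, and committer identity are hypothetical, and both pull request merges (feature to `qa`, then the release from `qa` to `main`) are simulated locally rather than performed on a git platform.

```shell
set -eu

# Scratch repository with an initial "production" commit on main.
repo="$(mktemp -d)"
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/main
git config user.name "Example Dev"         # placeholder identity
git config user.email "dev@example.com"
echo "select 1 as id" > orders.sql
git add orders.sql
git commit -q -m "Initial production model"

# One-time setup: derive the long-lived middle branch from main.
git checkout -q -b qa main

# Development: feature branches now start from qa, not main.
git checkout -q -b feature/add-status qa
echo "select 1 as id, 'new' as status" > orders.sql
git commit -q -am "Add status column to orders"

# Promotion (first): the approved feature PR is merged into qa.
git checkout -q qa
git merge -q --no-ff -m "Merge feature/add-status into qa" feature/add-status

# qa is now ahead of main; these commits are what a release would carry.
ahead="$(git rev-list --count main..qa)"
echo "qa is ahead of main by $ahead commit(s)"

# Promotion (second): the release PR (qa -> main) is merged.
git checkout -q main
git merge -q --no-ff -m "Release: merge qa into main" qa
```

The `main..qa` range is a handy way to list what a pending release contains before it is opened.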

Repository Branching Rules and Helpers

At a minimum, we like to set up:

- Branch protection on `main` and `qa` (like these settings for GitHub), requiring:

  - a pull request (no direct commits to `main` or `qa`)

  - pull requests must have at least 1 reviewer's approval

- A PR template (such as our boiler-plate PR template) for `feature` PRs against `qa`

- A PR template (such as our boiler-plate PR template for releases) for `qa` PRs against `main`

dbt Cloud Processes and Environments

Here’s our branching strategy again, but now with the dbt Cloud processes we want to incorporate:

In order to create the jobs in our diagram, we need dbt Cloud environments. Here are the common configurations for this setup:

| Environment Name | Environment Type | Deployment Type | Base Branch | Will handle… |
|---|---|---|---|---|
| Development | development | - | qa | Operations done in the IDE (including creating feature branches) |
| Feature CI | deployment | General | qa | A continuous integration job |
| Quality Assurance | deployment | Staging | qa | A deployment job |
| Release CI | deployment | General | main | A continuous integration job |
| Production | deployment | Production | main | A deployment job |

Data Platform Organization

Now we need to focus on where we want to build things in our data platform. For that, we need to set our database and schema settings on the environments. There are two common setups for mapping code, but before we get into those, remember this note from direct promotion:

We are showing environment configurations here, but a default database will be set at the highest level in a connection (which is a required setting of an environment). Deployment environments can override a connection's database setting when needed.

- Configuration 1: A 1:1 of `qa` and `main` assets

  In this pattern, the CI schemas are populated in a database outside of Production and QA. This is usually done to keep the databases aligned to what’s been merged on their corresponding branches. Here’s our diagram, now mapping to the data platform with this pattern:

  Here are our configurations for this pattern:

  | Environment Name | Database | Schema |
  |---|---|---|
  | Development | development | User-specified in Profile Settings > Credentials |
  | Feature CI | development | Any safe default, like dev_ci (it doesn’t even have to exist). The job we intend to set up will override the schema here anyway to denote the unique PR. |
  | Quality Assurance | qa | analytics |
  | Release CI | development | A safe default |
  | Production | production | analytics |

- Configuration 2: A reflection of the workflow initiative

  In this pattern, the CI schemas populate in a `qa` database because it’s a step in quality assurance. Here’s our diagram, now mapping to the data platform with this pattern:

  Indirect Promotion branches and how they relate to workflow initiative organization in the data platform

  Here are our configurations for this pattern:

  | Environment Name | Database | Schema |
  |---|---|---|
  | Development | development | User-specified in Profile Settings > Credentials |
  | Feature CI | qa | Any safe default, like dev_ci (it doesn’t even have to exist). The job we intend to set up will override the schema here anyway to denote the unique PR. |
  | Quality Assurance | qa | analytics |
  | Release CI | qa | A safe default |
  | Production | production | analytics |

Indirect Promotion Example

In this example, Steve uses the term “UAT” to define the automatic deployment of the middle branch and “QA” to define what’s built from feature branch pull requests. He also defines a database for each (with four databases total - one for development schemas, one for CI schemas, one for middle branch deployments, and one for production deployments) — we wanted to show you this example as it speaks to how configurable these processes are apart from our standard examples.

What did Indirect Promotion change?

You’ve probably noticed there is one overall theme of adding our additional branch, and that’s supporting our Quality Assurance initiative. Let’s break it down:

- Development

  While no one will be developing in the `qa` branch itself, it does need a level of oversight just like a `feature` branch needs in order to stay in sync with its base branch. This is because a change now to `main` (like a hotfix or accidental merge) won’t immediately flag our `feature` branches since they are based off of `qa`'s version of code. This branch needs to stay in sync with any change in `main` for this reason.

- Quality Assurance

  There are now two places where quality can be reviewed (`feature` and `qa`) before changes hit production. `qa` is typically leveraged in at least one of these ways for more quality assurance work:

  - Testing and reviewing how end-to-end changes are performing over time

  - Deploying the full image of the `qa` changes to a centralized location. Some common reasons to deploy `qa` code are:

    - Testing builds from environment-specific data sets (dynamic sources)

    - Creating staging versions of workbooks in your BI tool.

      This is most relevant when your BI tool doesn’t do well with changing underlying schemas. For instance, some tools have better controls for grabbing a production workbook for development, switching the underlying schema to a `dbt_cloud_pr_#` schema, and reflecting those changes without breaking things. Other tools will break every column selection you have in your workbook, even if the structure is the same. For this reason, it is sometimes easier to create one “staging” version workbook and always point it to a database built from QA code - the changes then can always be reflected and reviewed from that workbook before the code changes in production.

    - For other folks who want to see or test changes, but aren’t personas that would be included in the review process.

      For instance, you may have a subject matter expert reviewing and approving alongside developers, who understands the process of looking at `dbt_cloud_pr` schemas. However, if this person now communicates that they have just approved some changes with development to their teammates who will use those changes, the team might ask if there is a way they can also see the changes. Since the CI schema is dropped after merge, they would need to wait to see this change in production if there is no process deploying the middle branch.

- Promotion

  There are now two places where code needs to be promoted:

  - From `feature` to `qa` by a developer and peer (and optionally SMEs or stakeholders)

  - From `qa` to `main` by a release manager and SMEs or stakeholders

  Additionally, approved changes from feature branches are promoted together from `qa`.

- Deployment

  There are now two major branches code can be deployed from:

  - `qa`: The “working” version with changes, `feature` branches merge here

  - `main`: The “production” version

  Due to our changes collecting on the `qa` branch, our deployment process changes from continuous deployment (“streaming” changes to `main` in direct promotion) to continuous delivery (“batched” changes to `main`). Julia Schottenstein does a great job explaining the differences here.

Comparing Branching Strategies

Since most teams can make direct promotion work, we’ll list some key flags for when we start thinking about indirect promotion with a team:

- They speak about having a dedicated environment for a QA, UAT, staging, or pre-production work.

- They ask how they can test changes end-to-end and over time before things hit production.

- Their developers aren’t the same, or the only, folks who are checking data outputs for validity - even more so if the other folks are more familiar doing this validation work from other tools.

- Their different environments aren’t working with identical data. Like software environments, they may have limited or scrubbed versions of production data depending on the environment.

- They have a schedule in mind for making changes “public”, and want to hold features back from being seen or usable until then.

- They have very high-stakes data consumption.

If you fit any of these, you likely fit into an indirect promotion strategy.

Strengths and Weaknesses

We highly recommend that you choose your branching strategy based on which best supports your workflow needs over any perceived pros and cons — when these are put in the context of your team’s structure and technical skills, you’ll find some aren’t strengths or weaknesses at all!

-

Direct promotion

Strengths

- Much faster in terms of seeing changes - once the PR is merged and deployed, the changes are “in production”.

- Changes don’t get stuck in a middle branch that’s pending the acceptance of someone else’s validation on data output.

- Management is mainly distributed - every developer owns their own branch and is responsible for ensuring it’s in sync with what’s in main.

- There are no releases to worry about, so no extra processes to manage.

Weaknesses

- It can present challenges for testing changes end-to-end or over time. Our desire to build only modified and directly impacted models to reduce the amount of models executed in CI goes against the grain of full end-to-end testing, and our mechanism which executes only upon pull request or new commit won’t help us test over time.

- It can be more difficult for differing schedules or technical abilities when it comes to review. It’s essential in this strategy to include stakeholders or subject matter experts on pull requests before merge, because the next step is production. Additionally, some tools aren’t great at switching databases and schemas even if the shape of the data is the same. Constant breakage of reports for review can be too much overhead.

- It can be harder to test configurations or job changes before they hit production, especially if things function a bit differently in development.

- It can be harder to share code that works fully but isn’t a full reflection of a task. Changes need to be agreed upon to go to production so others can pull them in, otherwise developers need to know how to pull these in from other branches that aren’t main (and be aware of staying in sync or risk merge conflicts).

-

Indirect promotion

Strengths

- There’s a dedicated environment to test end-to-end changes over time.

- Data output can be reviewed either with a developer on PR or once things hit the middle branch.

- Review from other tools is much easier, because the middle branch tends to deploy to a centralized location. “Staging” reports can be set up to always refer to this location for reviewing changes, and processes for creating new reports can flow from staging to production.

- Configurations and job changes can be tested with production-like parameters before they actually hit production.

- There’s a dedicated environment to merge changes if you need them for shared development. Consumers of `main` will be none-the-wiser about the things that developers do for ease of collaboration.

Weaknesses

- Changes can be slower to get to production due to the extra processes intended for the middle branch. In order to keep things moving, there should be someone (or a group of people) in place who fully own managing the changes, validation status, and release cycle.

- Changes that are valid can get stuck behind other changes that aren’t - having a good plan in place for how the team should handle this scenario is essential because this conundrum can hold up getting things to production.

- There’s extra management of any new trunks, which will need ownership - without someone (or a group of people) who is knowledgeable, it can be confusing to understand what needs to be done and how to do it when things get out of sync.

- Requires additional compute in the form of scheduled jobs in the qa environment as well as an additional CI job from qa > main

Further Enhancements

Once you have your basic configurations in place, you can further tweak your project by considering which other features will be helpful for your needs:

- Continuous Integration:

- Only running and testing changed models and their dependencies

- Using dbt clone to get a copy of large incrementals in CI

- Development and Deployment:

- Using schema configurations in the project to add more separation in a database

- Using database configurations in the project to switch databases for model builds

Frequently Asked git Questions

General

How do you prevent developers from changing specific files?

Code owners files can help tag appropriate reviewers when certain files or folders are changed

How do you execute other types of checks in the development workflow?

If you’re thinking about auto-formatting or linting code, you can implement this within your dbt project.

Other checks are usually implemented through git pipelines (such as GitHub Actions) to run when git events happen (such as checking that a branch name follows a pattern upon a pull request event).

How do you revert changes?

This is an action performed outside of dbt through git operations - however, we recommend instead using an immediate solution with git tags/releases until your code is fixed to your liking:

- Apply a git tag (an available feature on most git platforms) on the commit SHA that you want to roll back to

- Use the tag as your `custom branch` on your production environment in dbt Cloud. Your jobs will now check out the code at this point in time.

- Now you can work as normal. Fix things through the development workflow or have a knowledgeable person revert the changes through git, it doesn’t matter - production is pinned to the previous state until you change the custom branch back to main!
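For teams that create the tag from the command line rather than the git platform's UI, the first step might look like the sketch below. It runs in a throwaway repository; the tag name `rollback-known-good`, the file name, and the committer identity are hypothetical. Setting the tag as the custom branch is still done in dbt Cloud and is not shown.

```shell
set -eu

repo="$(mktemp -d)"
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/main
git config user.name "Example Dev"         # placeholder identity
git config user.email "dev@example.com"

# A known-good commit, followed by a bad change that reached main.
echo "select 1 as id" > orders.sql
git add orders.sql
git commit -q -m "Known-good model"
good_sha="$(git rev-parse HEAD)"           # the commit to roll back to

echo "select 1 / 0 as id" > orders.sql
git commit -q -am "Bad change that reached main"

# Label the known-good commit with an annotated tag (hypothetical name).
git tag -a rollback-known-good -m "Pin production here until main is fixed" "$good_sha"

# With a real remote, publish the tag so dbt Cloud can check it out:
#   git push origin rollback-known-good

# The tag resolves to the good commit even though main has moved on.
git rev-parse "rollback-known-good^{commit}"
git rev-parse main
```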

Indirect Promotion Specific

How do you make releases?

For our examples, a release is just a pull request to get changes into main from qa, opened from the git platform.

You should be aware that having the source branch as qa on your pull request will also incorporate any new merges to qa since you opened the pull request, until it’s merged. Because of this it’s important that the person opening a release is aware of what the latest changes were and when a job last ran to indicate the success of all the release’s changes. There are two options we like to implement to make this easier:

- A CI job for pull requests to `main` - this will catch and rerun our CI job if there are any new commits on our `qa` branch

- An on-merge job using our `qa` environment. This will run a job any time someone merges. You may opt for this if you’d rather not wait on a CI pipeline to finish when you open a release. If this option is used, the latest job that ran should be successful and linked on the release’s PR.

Hierarchical promotion introduces changes that may not be ready for production yet, which holds up releases. How do you manage that?

The process of choosing specific commits to move to another branch is called Cherry Picking.
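To make the term concrete, here is a small illustration of what a cherry-pick does, run in a throwaway repository. It is shown only to explain the operation, not as a recommended practice. The file names, the `release/approved-only` branch name, and the committer identity are hypothetical.

```shell
set -eu

repo="$(mktemp -d)"
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/main
git config user.name "Example Dev"         # placeholder identity
git config user.email "dev@example.com"

echo "select 1 as id" > base.sql
git add base.sql
git commit -q -m "Initial production model"

# Two changes have landed on qa; only the first is approved for release.
git checkout -q -b qa main
echo "select 1 as ready" > ready.sql
git add ready.sql
git commit -q -m "Approved change"
ready_sha="$(git rev-parse HEAD)"
echo "select 1 as pending" > not_ready.sql
git add not_ready.sql
git commit -q -m "Change still under review"

# Cherry-pick only the approved commit onto a branch cut from main.
git checkout -q -b release/approved-only main
git cherry-pick "$ready_sha" > /dev/null

# Only the approved file is present on this branch.
ls
```

The picked commit gets a new SHA on the target branch, so `qa` and the release branch now hold the same change under different commits. That is one reason the branches can be harder to reconcile afterwards.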

You may be tempted to change to a less standard branching strategy to avoid this - our colleague Grace Goheen has written some thoughts on this and provided examples - it’s a worthwhile read!

dbt does not perform cherry picking operations; these need to be done from a command line interface or your git platform’s user interface, if the option is available. We align with Grace on this one - not only does cherry picking require a very good understanding of git operations and the state of the branches, but when it isn’t done with care it introduces a host of other issues that can be hard to resolve. What we tend to see is that the CI processes we’ve exemplified instead shift what the definition of the first PR’s approval is - not only can it be approved for coding and syntax by a peer, but it can also be approved for its output by selecting from objects built within the CI schema. This eliminates a lot of the issues with code that can’t be merged to production.

We also implement other features that can help us omit offending models or introduce more quality:

- The `--exclude` command flag helps us omit building models in a job

- The `enabled` configuration helps us keep models from being executed in any job for a longer-term solution

- Using contracts and versions helps alleviate breaking code changes between teams in dbt Mesh

- Unit tests and data tests, along with forming best practices around the minimum requirements for every model helps us continuously test our expectations (see dbt_meta_testing package)

- Using the dbt audit helper package or enabling advanced CI on our continuous integration jobs helps us understand the impacts our changes make to the original data set

If you are seeing a need to cherry-pick regularly, assessing your review and quality assurance processes and where they are happening in your pipeline can be very helpful in determining how you can avoid it.

What if a bad change made it all the way into production?

The process of fixing main directly is called a hotfix. This needs to be done with git locally or with your git platform’s user interface because dbt’s IDE is based on the branch you set for your developer to base from (in our case, qa).

The pattern for hotfixes in hierarchical promotion looks like this:

Here’s how it’s typically performed:

- Create a branch from `main`, test and review the fix

- Open a PR to `main`, get the fix approved, then merge. The fix is now live.

- Check out `qa`, and `git pull` to ensure it’s up to date with what’s on the remote

- Merge `main` into `qa`: `git merge main`

- `git push` the changes back to the remote

- At this point in our example, developers will be flagged in dbt Cloud’s IDE that there is a change on their base branch and can “Pull from remote”. However, if you implement more than one middle branch you will need to continue resolving your branches hierarchically until you update the branch that developers base from.
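The hotfix steps above can be sketched end to end as follows. This is an illustration under stated assumptions: a local bare repository stands in for the remote on your git platform, the PR merge to `main` is simulated locally, and the branch name `hotfix/orders-id`, the file name, and the committer identity are hypothetical.

```shell
set -eu

work="$(mktemp -d)"
# A bare repository stands in for the remote on your git platform.
git init -q --bare "$work/origin.git"
git init -q "$work/local"
cd "$work/local"
git symbolic-ref HEAD refs/heads/main
git config user.name "Example Dev"         # placeholder identity
git config user.email "dev@example.com"
git remote add origin "$work/origin.git"

# Existing state: main and qa both exist on the remote.
echo "select 1 as id" > orders.sql
git add orders.sql
git commit -q -m "Initial production model"
git push -q -u origin main
git checkout -q -b qa main
git push -q -u origin qa

# 1. Create a branch from main, then test and review the fix.
git checkout -q -b hotfix/orders-id main
echo "select 2 as id" > orders.sql
git commit -q -am "Hotfix: correct orders id"

# 2. The PR to main is approved and merged on the platform (simulated locally).
git checkout -q main
git merge -q --no-ff -m "Merge hotfix/orders-id" hotfix/orders-id
git push -q origin main

# 3. Check out qa and make sure it matches the remote.
git checkout -q qa
git pull -q --ff-only

# 4. Merge main into qa. After a real platform merge, update your local main
#    first (git checkout main && git pull), or this merges a stale copy.
git merge -q -m "Sync qa with main after hotfix" main

# 5. Push qa back to the remote so developers can pull the fix.
git push -q origin qa
```

If `qa` is protected against direct pushes, the last two steps would instead go through a pull request from `main` (or a sync branch) into `qa`.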

What if we want to use more than one middle branch in our strategy?

In our experience, using more than one middle branch is rarely needed. The more steps you are away from main, the more hurdles you’ll need to jump through getting back to it. If your team isn’t properly equipped, this ends up putting a lot of overhead on development operations. For this reason, we don’t recommend more branches if you can help it. The teams who are successful with more trunks are built with plenty of folks who can properly dedicate the time and management to these processes.

This structure is mostly desired when different teams are required to use different versions of data (e.g., scrubbed data) while working with the same code changes. This structure allows each team to have a dedicated environment for deployments. Example:

- Developers work off of mocked data for their `feature` branches and merge to `qa` for end-to-end and over-time testing of all merged changes before releasing to `preproduction`.

- Once `qa` is merged to `preproduction`, the underlying data being used switches to using scrubbed production data and other personas can start looking at and reviewing how this data is functioning before it hits production.

- Once `preproduction` is merged to `main`, the underlying data being used switches to production data sets.

This use case can be covered with a more simple branching strategy through the use of git tags and dbt environment variables to switch source data:

-

Indirect Promotion:

-

Direct Promotion:

No matter the reason for more branches, these points are always relevant to plan out:

- Can we accurately describe the use case of each branch?

- Who owns the oversight of any new branches?

- Who are the major players in the promotion process between each branch and what are they responsible for?

- Which major branches do we want dbt Cloud deployment jobs for?

- Which PR stages do we want continuous integration jobs on?

- Which major branch rules or PR templates do we need to add?

By answering these questions, you should be able to follow our same guidance from our examples for setting up your additional branches.

Direct Promotion Specific

We need a middle environment and don’t want to change our branching strategy! Is there any way to reflect what’s in development?

git releases/tags are a mechanism which help you label a specific commit SHA. Deployment environments in dbt Cloud can use these just like they can a custom branch. Teams will leverage this either to pin their environments to code at a certain point in time or to keep as a roll-back option if needed.

We can use the pinning method to create our middle environment. Example:

- We create a release tag, `v2`, from our repository.

- We specify `v2` as our branch in our Production environment’s custom branch setting. Jobs using Production will now check out code at `v2`.

- We set up an environment called “QA”, with the custom branch setting as `main`. For the database and schema, we specify the `qa` database and `analytics` schema. Jobs created using this environment will check out code from `main` and build it to `qa.analytics`.
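On the git side, the pinning example can be sketched as below in a throwaway repository. The tag name `v2` comes from the example above. The file name and committer identity are hypothetical, and the dbt Cloud environment settings themselves are not shown.

```shell
set -eu

repo="$(mktemp -d)"
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/main
git config user.name "Example Dev"         # placeholder identity
git config user.email "dev@example.com"

echo "select 1 as id" > orders.sql
git add orders.sql
git commit -q -m "Version 2 of the project"

# Create the release tag; Production's custom branch would be set to v2.
git tag v2

# Work keeps merging to main after the tag is cut.
echo "select 1 as id, 'new' as status" > orders.sql
git commit -q -am "Change waiting in QA"

# Commits a QA environment on main would build that Production (v2) would not.
git log --oneline v2..main
pending="$(git rev-list --count v2..main)"
echo "$pending commit(s) on main not yet in v2"
```

Under this setup, promoting to production would presumably mean cutting a newer tag and updating the Production environment's custom branch to it.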

How do we change from a direct promotion strategy to an indirect promotion strategy?

Here are the additional setup steps in a nutshell - for more details be sure to read through the indirect promotion section:

- git Platform

  - Create a new branch derived from `main` for your middle branch.

  - Protect the branch with branch protection rules

- dbt Cloud

  - Development: Switch your environment to use the custom branch option and specify your new middle branch’s name. This will base developers off of the middle branch.

  - Continuous Integration: If you have an existing environment for this, ensure the custom branch is also changed to the middle branch’s name. This will change the CI job’s trigger to occur on pull requests to your middle branch.

At this point, your developers will be following the indirect promotion workflow and you can continue working on things in the background. You may still need to set up a database, database permissions, environments, deployment jobs, etc. Here is a short checklist to help you out! Refer back to our section on indirect promotion for many more details:

- Decide if you want to deploy your middle branch’s code. If so:

  - If needed, create the database where the objects will build

  - Set up a service account and give it all the proper permissions. For example, if your deployments will build in a dedicated database, the service account should have full access to create and modify the contents within this database. It should also have select-only access to raw data.

  - Set up an environment for your middle branch in dbt Cloud, being sure to connect it to the location you want your deployments to build in.

  - Set up any deployment jobs using your middle branch’s environment

- Decide if you want CI on release pull requests (from your middle branch to main). If so:

  - Set up an environment called “Release CI”

  - Set up the continuous integration job using the “Release CI” environment